Hacking has entered a new era in which it is used for graver purposes, affecting important government sectors, personal privacy and even human lives. Car hacking became a hot topic in 2015, when a group of hackers showed Chrysler how its computerized vehicles could be hacked over the internet with extensive programming. The repercussions were costly for Chrysler, which had to issue a fix for 1.4 million of its computerized Jeeps.

Now a new revelation has made matters worse for the automotive industry. Remote hijacking and even theft of self-driven cars had already been made possible by complex frameworks built by hackers. The latest finding goes further: it lets even non-technical people interfere with self-driven cars and cause harm.

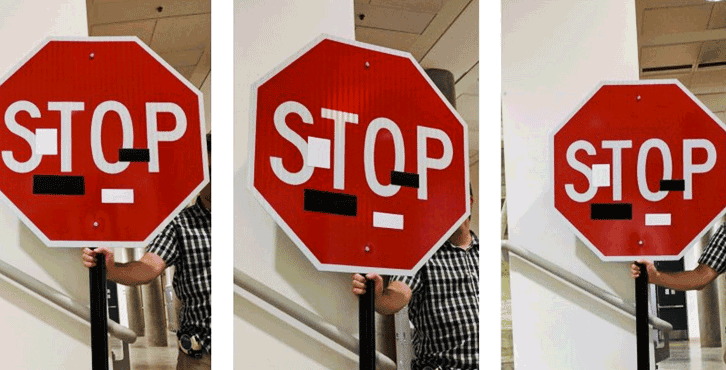

The attack requires nothing more than a couple of stickers placed on road signs, which can confuse a self-driven car and cause a minor or major accident. The research was carried out at the University of Washington, the University of Michigan Ann Arbor, Stony Brook University, and the University of California Berkeley. The credited researchers are Ivan Evtimov, Kevin Eykholt, Earlence Fernandes, Tadayoshi Kohno, Bo Li, Atul Prakash, Amir Rahmati, and Dawn Song. They demonstrate how simple the process is: anyone can print a few stickers at home and place them on road signs. The research shows that the vision systems of self-driven and automated cars can miss or misread road signs and thus put human lives at stake.

The researchers' paper, titled Robust Physical-World Attacks on Machine Learning Models, shows how the image recognition systems in such cars fail when tricked, making self-driven car hacking possible at the cost of a mere sticker!

A camera and a printer are all that is needed. With a few simple stickers, a “STOP” sign can be misread as a speed limit sign by a self-driven car. The researchers explained:

“We [think] that given the similar appearance of warning signs, small perturbations are sufficient to confuse the classifier,” the researchers told Car and Driver. “In future work, we plan to explore this hypothesis with targeted classification attacks on other warning signs.”
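To get a feel for why small perturbations can be enough, here is a minimal Python sketch. It is not the algorithm from the paper. It uses a made-up two-class linear classifier, and the weights, feature values, labels, and function names are all hypothetical. It only illustrates the general idea: nudging every input feature by a small amount in the right direction can flip the predicted class.

```python
# Toy illustration only: a hypothetical two-class linear "sign classifier".
# The weights, features, and labels are invented for this sketch and do not
# come from the research paper or from any real vehicle.

LABELS = ["stop", "speed_limit"]

# One weight vector per class over four made-up image features.
WEIGHTS = {
    "stop":        [0.9, 0.8, -0.2, 0.1],
    "speed_limit": [0.2, 0.1, 0.7, 0.6],
}


def scores(features):
    """Return the linear score of each class for a feature vector."""
    return {
        label: sum(w * x for w, x in zip(WEIGHTS[label], features))
        for label in LABELS
    }


def classify(features):
    """Pick the class with the highest score."""
    s = scores(features)
    return max(LABELS, key=lambda label: s[label])


def perturb(features, target, source, epsilon):
    """Shift each feature by at most epsilon toward the target class.

    For a linear model, the score gap (target minus source) grows fastest
    when each feature moves in the sign of the weight difference.
    """
    adversarial = []
    for x, w_t, w_s in zip(features, WEIGHTS[target], WEIGHTS[source]):
        diff = w_t - w_s
        step = epsilon if diff > 0 else -epsilon if diff < 0 else 0.0
        adversarial.append(x + step)
    return adversarial


clean_sign = [0.6, 0.5, 0.4, 0.4]
stickered_sign = perturb(clean_sign, "speed_limit", "stop", epsilon=0.15)

print(classify(clean_sign))      # stop
print(classify(stickered_sign))  # speed_limit
```

No feature moves by more than 0.15, yet the label changes. Real image classifiers are far more complex than this toy model, but the same kind of sensitivity is what such sticker attacks exploit.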

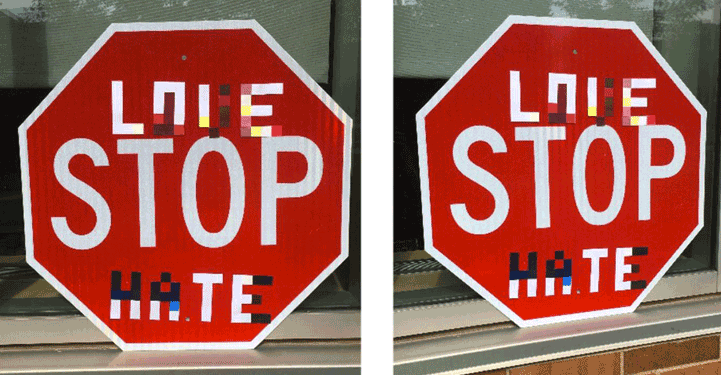

Most of the tests were run on the STOP sign, as it is one of the most vital signs in traffic control. If a car fails to recognize a STOP sign, the result could be a disastrous crash at a junction. The tests included placing words like “LOVE” and “HATE” above and below the word STOP on the sign, as well as scattering small, meaningless stickers randomly across it. In all cases, the car got confused.

The manufacturer of the car that was tested is not yet known, but the findings are sure to leave those of us who hope to buy a self-driven car in the near future in sheer fear, doubting the credibility of self-driven cars.